AI and your medical data: What you need to know to stay private

It’s Monday morning, and you’re staring at three years of lab results, prescriptions, and insurance papers clogging your inbox. To sort them, you consider uploading the files to a consumer AI tool like Claude or ChatGPT.

You might even subscribe to a paid plan, assuming it offers better security. Either way, you are handing your personal data to an environment you don't control.

Hospitals have dedicated teams to manage patient data. When you manage your own, you have no such protection. The laws built for large institutions offer no shield for you at home, and that gap exposes your most sensitive information to risk the moment you press the 'upload' button.

The law that doesn't protect you

American health privacy relies on the Health Insurance Portability and Accountability Act (HIPAA), passed in 1996 primarily to address health insurance portability. Cloud services and instant data transfer were not part of the picture.

Then came the Privacy Rule in 2003 and the Security Rule in 2005. These were made for hospitals. Big institutions. Big systems. They kept everything inside strictly controlled channels, but they didn’t protect individuals managing their own records.

The HITECH Act of 2009 pushed hospitals toward electronic health records for efficiency, but it offered nothing to a person at home trying to manage their own records. HIPAA does not cover medical data you upload to a consumer AI app yourself. You can be careful, but the legal framework will not protect you.

You are trusting a global platform that is not a HIPAA-covered entity with the information that defines your health history. Once health data leaves your control, its privacy is no longer guaranteed.

How others can inadvertently expose your health data

Controlling your own uploads isn't enough, because you can’t control what others upload about you. This is the most significant risk, and the least visible one.

Unintentional exposure through your network

Your health information can be exposed inadvertently by family members, someone in a billing office, anyone. They upload something to an AI app, and your information goes with it. In the workplace, researchers call this “shadow AI”: the unauthorized use of consumer AI tools for professional tasks.

In 2025, 70% of healthcare workers reportedly used personal AI tools for work, often unaware they were putting patient data at risk.

If your spouse uses an AI app to summarize a complex family medical record, your details are included.

If a receptionist drafts your visit notes in ChatGPT, your information is stored in an uncontrolled system.

If billing employees use an AI app to sort claims, your data ends up on hidden servers.

This is your health information moving without your consent, entirely invisible to you.

Consumer tools have no legal mandate

Generative AI is everywhere, and friends and coworkers use it to organize documents. What people usually overlook is that consumer AI tools have no HIPAA obligation to protect health information. They can log, store, or accidentally leak it. Once files enter an unsecured system, data can leak through caches, logs, or training data pipelines without notice.

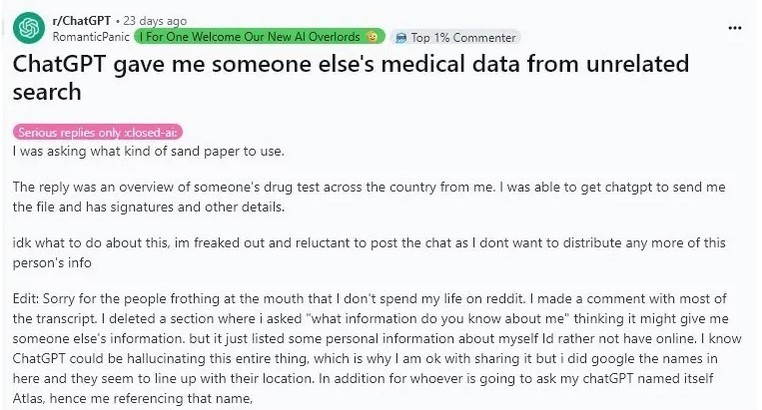

In July 2025, a Reddit user asking an AI chatbot about woodworking sandpaper reported receiving another person’s LabCorp drug test results, complete with names and medical data.

Screenshot of the Reddit post. Source: Medium

If another person's sensitive results can appear in your chat, yours can appear in theirs.

The technical risks to your health data integrity

The problem isn't just leaking chat logs. AI models carry three major technical dangers:

Data memorization: Advanced AI models, especially Large Language Models (LLMs), can memorize specific passages of the data they were trained on. If your record was fed into training, an attacker may be able to use a technique researchers call a "training data extraction attack" to make the model reproduce your personal health information (PHI) from memory. This risk remains even if the data was supposedly anonymized.

Algorithmic bias: AI models carry biases from their training data. If a model analyzes your history, those biases (say, toward certain demographics or conditions) could lead to inaccurate summaries or risk estimates. That is an integrity risk: inaccurate AI-generated content can find its way into your official medical documentation and affect the quality of your future care.

De-identification failure: Just removing names isn't enough. AI systems are excellent at connecting the dots. They can cross-reference small fragments, like your age, a rare condition, and your town, across different data sets. The same pattern-matching that makes AI useful also makes it a powerful tool for putting a name back on an anonymous record. That raises the bar for safe data privacy far beyond what current public platforms can promise.
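To see how little it takes, here is a minimal Python sketch of the idea behind k-anonymity. It counts how many records in a small, made-up dataset share each combination of age, condition, and town. Any combination that appears only once (k = 1) points to exactly one person, even though no name is stored. The records, field names, and helper functions are hypothetical and exist only for illustration.

```python
from collections import Counter

# Hypothetical "anonymized" records: names removed, but quasi-identifiers remain.
records = [
    {"age": 34, "condition": "asthma", "town": "Springfield"},
    {"age": 34, "condition": "asthma", "town": "Springfield"},
    {"age": 52, "condition": "type 2 diabetes", "town": "Springfield"},
    {"age": 52, "condition": "type 2 diabetes", "town": "Springfield"},
    {"age": 41, "condition": "Fabry disease", "town": "Shelbyville"},
]

QUASI_IDENTIFIERS = ("age", "condition", "town")


def group_sizes(rows, keys):
    """Count how many rows share each combination of the given fields."""
    return Counter(tuple(row[k] for k in keys) for row in rows)


def unique_records(rows, keys):
    """Return the rows whose combination of fields occurs exactly once."""
    sizes = group_sizes(rows, keys)
    return [row for row in rows if sizes[tuple(row[k] for k in keys)] == 1]


def k_anonymity(rows, keys):
    """Smallest group size; k == 1 means at least one record is unique."""
    return min(group_sizes(rows, keys).values())


if __name__ == "__main__":
    print("k =", k_anonymity(records, QUASI_IDENTIFIERS))
    for row in unique_records(records, QUASI_IDENTIFIERS):
        print("re-identifiable:", row)
```

Real re-identification attacks join several data sets rather than one table, but the principle is the same: the fewer people who share a combination of traits, the easier it is to put a name on the record.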

Surrounding systems and the chain of trust

Third-party vendors that support the primary AI platform further erode your control over your data. Risk runs through the platform's entire service chain, not just the platform itself.

In late 2025, OpenAI reported a security incident at Mixpanel, a third-party analytics provider used on its API platform. Chat content was reportedly not affected, but attackers obtained profile details such as names, email addresses, locations, and account IDs. When you send medical files to a consumer platform, you trust a sprawling network of external vendors you never see or approve. Your sensitive health data demands stronger boundaries and a distinct, secure architecture.

You need to control your health data

The problem isn't using a smart tool to manage your records. The problem is where you put your records. When you upload files to a consumer app, you surrender ownership and control of your most personal information.

To truly protect your sensitive health records, you need to change how you think about them. You need absolute ownership. This means:

Your files stay locked: Your records must be fully encrypted. The company running the tool shouldn't have easy access.

No outside access: The tool that organizes your files must run in a secure, isolated environment. No one outside should be able to get in and take them.

See every step: You must be able to see a log of every time the tool used your data or opened your files.
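One common way to make an access log trustworthy is to chain its entries with cryptographic hashes, so that quietly editing a past entry breaks the chain. The Python sketch below is a minimal illustration of that general idea under stated assumptions. It is not a description of how any particular product, MyVault included, implements logging, and the `AccessLog` class and its field names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AccessLog:
    """Hypothetical append-only log where each entry commits to the one before it."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        # Canonical JSON (sorted keys) so the same event always hashes the same way.
        payload = json.dumps(event, sort_keys=True) + prev_hash
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def record(self, actor: str, action: str, file_id: str, timestamp: str) -> None:
        event = {"actor": actor, "action": action, "file": file_id, "time": timestamp}
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append(
            {"event": event, "prev": prev_hash, "hash": self._digest(prev_hash, event)}
        )

    def verify(self) -> bool:
        """Recompute the chain; an edited, reordered, or removed middle entry fails.

        Dropping the newest entries is not caught by the chain alone; that needs an
        extra safeguard, such as keeping a copy of the latest hash elsewhere.
        """
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AccessLog()
    log.record("organizer", "read", "lab-results-2023.pdf", "2026-02-01T09:00:00Z")
    log.record("user", "share", "lab-results-2023.pdf", "2026-02-01T09:05:00Z")
    print(log.verify())  # True
    log.entries[0]["event"]["action"] = "none"  # tamper with history
    print(log.verify())  # False
```

The design choice here is that each entry's hash covers the previous entry's hash, so rewriting one line of history would require recomputing every later entry, which a reader holding a copy of the latest hash would notice.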

Other industries, such as financial advising, already apply strict security rules like these to protect financial data. Your health records demand the same level of safety.

MyVault: a secure way to manage your files

MyVault is built on these principles. Your files never leave your control, and they are never uploaded to risky outside AI tools.

When you connect your email or cloud storage, your data stays encrypted, and we store the encryption keys for your files securely. MyVault uses smart tools to organize your records and find things, but they work only inside a private, secure environment that we manage for you.

With MyVault, you can manage your life, find old medical bills, and share documents with others only when you allow it. You can also stop sharing those files anytime. You see every action the tool takes. You control everything. Nothing is exposed without your permission.
