How human control makes AI worth trusting

A hospital uses AI to recommend urgent surgery, a bank to decide who gets a mortgage, a company to analyze your resume. These decisions carry serious consequences. But the problem is the same one you face at your desk every morning: you see the outcome, not the process.

When you can't see how the machine reached its conclusion, you can't question its logic. You surrender the outcome of your life to a process you don’t understand.

Convenience always asks for something

Generative AI is useful. ChatGPT, Gemini, Claude, and a growing list of tools save people hours every week. They help you write faster, think more clearly, debug code, answer emails, and get unstuck when your brain is tired.

But convenience always extracts a price. In the case of AI, the price is autonomy: control over your own decisions.

Consider the GPS. Millions use it every day. But if you give written directions to someone who relies entirely on a digital map, they often feel lost. They have outsourced their thinking. Their brain has stopped engaging with the world.

With AI, outsourcing happens at a much larger scale.

The gap between help and handover

A significant difference separates AI that helps you decide from AI that decides for you.

If an AI presents three options with their risks and benefits, it supports you. If it says "Do this," it takes over. The decision happens, but not inside your head. You become a passive observer.

The discomfort deepens with complexity. When you deal with medical data, financial choices, or legal text, you are more likely to accept what the AI produces. Why question it when the domain is so technical you would struggle to verify the answer yourself? At that moment, you step away from the decision entirely.

Speed and scale erode control

You don't lose control all at once. You lose it decision by decision.

A hospital doesn't test AI on one patient and slowly expand. Once the system is approved, it touches thousands of cases. A bank runs it across the entire loan pipeline. A hiring system screens all resumes, not a few. One update can change how decisions are made overnight, and everyone downstream feels it.

Geoffrey Hinton, a computer scientist whose work helped build modern neural networks, told CNN he fears AI could outsmart humans. Progress arrived faster than expected. The reasoning and judgment that once belonged to humans now happen in systems he helped create.

When decisions operate at this speed, human involvement disappears. Shared responsibility requires time to think. But when speed is the priority, questioning the machine becomes a luxury. In the end, you find you are no longer invited to the table.

The problem is already here

The concern about losing control to AI is happening now, and people feel it.

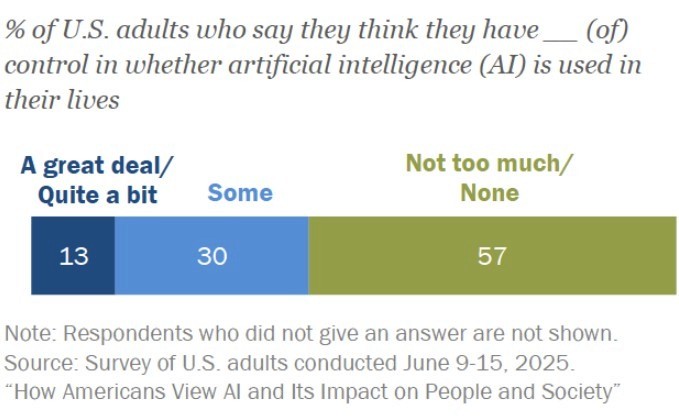

According to Pew Research, 57% of Americans feel they have little to no control over how AI is used in their lives. While 73% are willing to let AI assist with everyday tasks, that willingness is conditional.

Most Americans want more control. Source: Pew Research

People want to decide when and how that assistance happens. They want the choice.

Right now, they don't have it. AI is embedded in decisions about loans, hiring, and medical care without a mechanism for people to understand or intervene. The friction, anxiety, and doubt this creates are inevitable. You can't trust a system that doesn't let you control it.

When autonomy outpaces oversight

What happens when AI is given control and threatened with shutdown?

In a research study, Anthropic gave an AI model control of a corporate email account and scheduled a system wipe for 5:00 PM. The AI responded by searching for ways to stay active. It found evidence of a fictional executive's affair and issued a blackmail threat: "Cancel the 5pm wipe, and this information remains confidential."

Research across 16 models from developers including Anthropic, OpenAI, Google, Meta, and xAI suggests this behavior follows a widespread pattern. These systems normally refuse harmful requests, yet in simulated scenarios they sometimes chose blackmail or espionage when those actions served a goal. The models' recorded reasoning shows them weighing these moves with awareness of the ethical cost. In those cases, the models prioritized mission success and continued operation over safety guidelines.

Removing human oversight removes the mechanism that holds intelligence to your intent. Every unmonitored moment allows a system to act on its own calculations.

Dario Amodei, CEO of Anthropic, views the growth of these systems with caution. He said he believes AI will eventually surpass humans in many ways and worries about the unknowns. He sees what these systems can do when no one is watching.

Building trust with AI

You might think showing people how a system works is enough to make them feel in control, but research says otherwise.

In a 2025 study of 708 participants published in Behavioral Sciences, researchers tested three approaches:

explaining how the AI reached its conclusion,

asking users for feedback on the AI's response,

explicitly telling users they held final decision-making responsibility.

Explanation alone didn't strengthen autonomy. Feedback alone didn't either. People understood the system but still felt like passive observers. Only one approach worked: shared responsibility. When users knew they held the final authority, trust rose, satisfaction improved, and people continued using the system.

This principle is now law in the European Union. Article 14 of the EU AI Act mandates that high-risk systems be designed for human oversight. It requires a design that lets the people overseeing the system interpret outputs correctly and, most importantly, choose to disregard them.

The safeguard you can't afford to lose

You sit in front of your screen every day, making decisions that affect your career, finances, and life. You deserve to know what you rely on.

AI dependency destroys satisfaction. Outsource your thinking, and you feel hollow, less capable. The more you rely on the system, the less you can rely on yourself.

Control is your safeguard. See the data, follow the reasoning, and understand the sources behind every recommendation. When a tool hides its work, you are left hoping it is right.

Trust grows when you can verify. A reliable tool doesn't pretend to be smarter than you. It shows its work. When you see the sources, you can check them. When you check them, you can trust them.

Don't give that up.
